TL;DR

Data isn’t just an asset anymore – it’s the foundation of AI success. At Levi9, we’ve spent a decade building data platforms that scale, adapt, and deliver real business value. The lesson? Start with strategy, avoid common pitfalls, and choose platforms that grow with your needs. The right data foundation today determines your AI capabilities tomorrow.

Why This Matters

For years, data projects had a bad reputation. By one often-quoted estimate, 80% of them failed, undone by poor strategy, the wrong tooling, and disconnected teams. But something fundamental has shifted.

AI changed everything. Suddenly, data quality isn’t just a technical concern; it’s the difference between AI that transforms your business and AI that burns your budget. As the Levi9 team has learned across dozens of projects: if your AI model eats junk food, it won’t perform the way you want.

The Foundation: Data Strategy

Before choosing platforms or hiring data scientists, you need clarity. A data strategy isn’t a document that sits on a shelf; it’s your roadmap to value.

“Think up front before you start. What are the business goals your data project needs to support? You need clearly defined business goals and a way to measure them. That ensures future success.”

Every successful data strategy stands on four pillars:

1. Business Alignment

Align your business goals with your data strategy from day one. Data for data’s sake is expensive theatre. Data that drives decisions is transformative.

2. Data Governance

Standards, security, compliance. These aren’t afterthoughts. In the age of GDPR and the AI Act, governance protects both your customers and your business.

3. Data Architecture

There’s a plethora of options today: cloud-native, hybrid, multi-cloud. The right architecture depends on your team’s skills, your data sources, and your business needs.

4. Adoption and Execution

The best strategy means nothing if your team can’t execute. Working in agile sprints, we’ve seen how quick adjustments prevent expensive mistakes: when you discover something needs to change, you haven’t gone too far to pivot.

The Pitfalls That Still Catch Companies

Even with a solid strategy, traps await. We’ve seen them firsthand:

Tool-First Thinking

“I saw this fancy platform, it can do everything, let’s use it.” This is how you end up with a €7 million platform nobody uses. Tools should solve problems, not create them.

Lack of Governance

Who owns the data? Who manages it? Without clear roles—data stewards, chief data officers—your data becomes fragmented and unreliable. Low trust follows, then reports get ignored.

Ignoring Data Quality

Data engineers focus on structure and format, but business impact? That’s often an afterthought. Miscommunication across teams leads to semantic confusion, and suddenly your reports aren’t trusted.

“It’s very tough to gain trust but very easy to lose it. Use data contracts. Detect anomalies early. Make quality everyone’s responsibility.”
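A data contract is an explicit, agreed description of what a data producer promises to deliver, checked automatically before the data reaches a report. The minimal sketch below assumes a hypothetical `orders` feed and uses a plain dictionary as the contract, plus a simple row-count check as an early anomaly signal. The field names, the `check_contract` and `row_count_anomaly` helpers, and the 50% tolerance are illustrative assumptions, not a specific tool’s API.

```python
from dataclasses import dataclass

# Hypothetical contract for an "orders" feed: field name -> (expected type, nullable?)
ORDERS_CONTRACT = {
    "order_id": (str, False),
    "amount_eur": (float, False),
    "country": (str, True),
}


@dataclass
class ContractReport:
    violations: list[str]

    @property
    def ok(self) -> bool:
        return not self.violations


def check_contract(rows: list[dict], contract: dict) -> ContractReport:
    """Collect every contract violation in a batch of rows (types are checked strictly)."""
    violations = []
    for i, row in enumerate(rows):
        # Fields the producer dropped or added without agreement
        for name in sorted(contract.keys() - row.keys()):
            violations.append(f"row {i}: missing field '{name}'")
        for name in sorted(row.keys() - contract.keys()):
            violations.append(f"row {i}: unexpected field '{name}'")
        for name, (expected_type, nullable) in contract.items():
            if name not in row:
                continue
            value = row[name]
            if value is None:
                if not nullable:
                    violations.append(f"row {i}: '{name}' must not be null")
            elif not isinstance(value, expected_type):
                violations.append(
                    f"row {i}: '{name}' expected {expected_type.__name__}, "
                    f"got {type(value).__name__}"
                )
    return ContractReport(violations)


def row_count_anomaly(today: int, history: list[int], tolerance: float = 0.5) -> bool:
    """Flag a load whose row count deviates from the recent average by more than `tolerance`."""
    if not history:
        return False
    baseline = sum(history) / len(history)
    return abs(today - baseline) > tolerance * baseline


rows = [
    {"order_id": "A1", "amount_eur": 19.99, "country": "NL"},
    {"order_id": "A2", "amount_eur": "20", "country": None},
]
report = check_contract(rows, ORDERS_CONTRACT)
print(report.ok)  # False
for violation in report.violations:
    print(violation)  # row 1: 'amount_eur' expected float, got str
print(row_count_anomaly(120, [1000, 980, 1020]))  # True
```

The point of running such checks at ingestion time is that a broken feed is stopped, and its owner notified, before anyone sees a wrong number in a report.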

Over-Trusting AI

AI is powerful, but it’s not infallible. Treat it as a copilot, not an autopilot. If you don’t validate its results, the errors people used to make by hand get automated at scale. And when a compliance audit comes, “the model did it” isn’t a defense.
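One way to keep AI in the copilot seat is to let the model propose and let people decide on anything uncertain, while logging every decision so it can be explained later. The sketch below is a minimal illustration under our own assumptions: the `Prediction` fields, the `route` helper, the 0.9 threshold, and the audit-record format are all hypothetical, not taken from any particular product.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Prediction:
    record_id: str
    label: str
    confidence: float  # model-reported score, assumed to be in [0, 1]


def route(predictions, threshold=0.9, model_version="model-v1"):
    """Auto-accept confident predictions, queue the rest for a human, log every routing decision."""
    accepted, review_queue, audit_log = [], [], []
    for p in predictions:
        if p.confidence >= threshold:
            decision = "auto_accepted"
            accepted.append(p)
        else:
            decision = "sent_to_review"
            review_queue.append(p)
        # Record what was decided, by which model version, and when,
        # so every automated outcome can be traced during an audit.
        audit_log.append({
            "record_id": p.record_id,
            "label": p.label,
            "confidence": p.confidence,
            "decision": decision,
            "routed_by": model_version,
            "at": datetime.now(timezone.utc).isoformat(),
        })
    return accepted, review_queue, audit_log


preds = [
    Prediction("inv-001", "approve", 0.97),
    Prediction("inv-002", "reject", 0.62),
]
accepted, review_queue, audit_log = route(preds)
print([p.record_id for p in accepted])      # ['inv-001']
print([p.record_id for p in review_queue])  # ['inv-002']
```

Where to set the threshold is a business decision as much as a technical one: lowering it automates more and reviews less. It also assumes the model’s confidence scores are reasonably calibrated, which is itself worth validating.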

The Re-Ingest Tax

Change your architecture mid-flight, and you’ll pay dearly. Re-ingesting massive datasets costs time and money. Get the foundation right the first time.

What's New on the Horizon

The data landscape is evolving faster than ever. Here’s what matters now:

Real-Time Everything

Batch processing served us well for decades, but waiting hours or days for insights? That’s over. Real-time data streams let you act immediately, whether it’s personalizing user experiences or catching fraud as it happens.

Platforms like Databricks (with Delta Live Tables and Structured Streaming) and Snowflake (with Snowpipe) make real-time processing scalable and cost-efficient. AWS Kinesis offers cloud-native streaming as well.

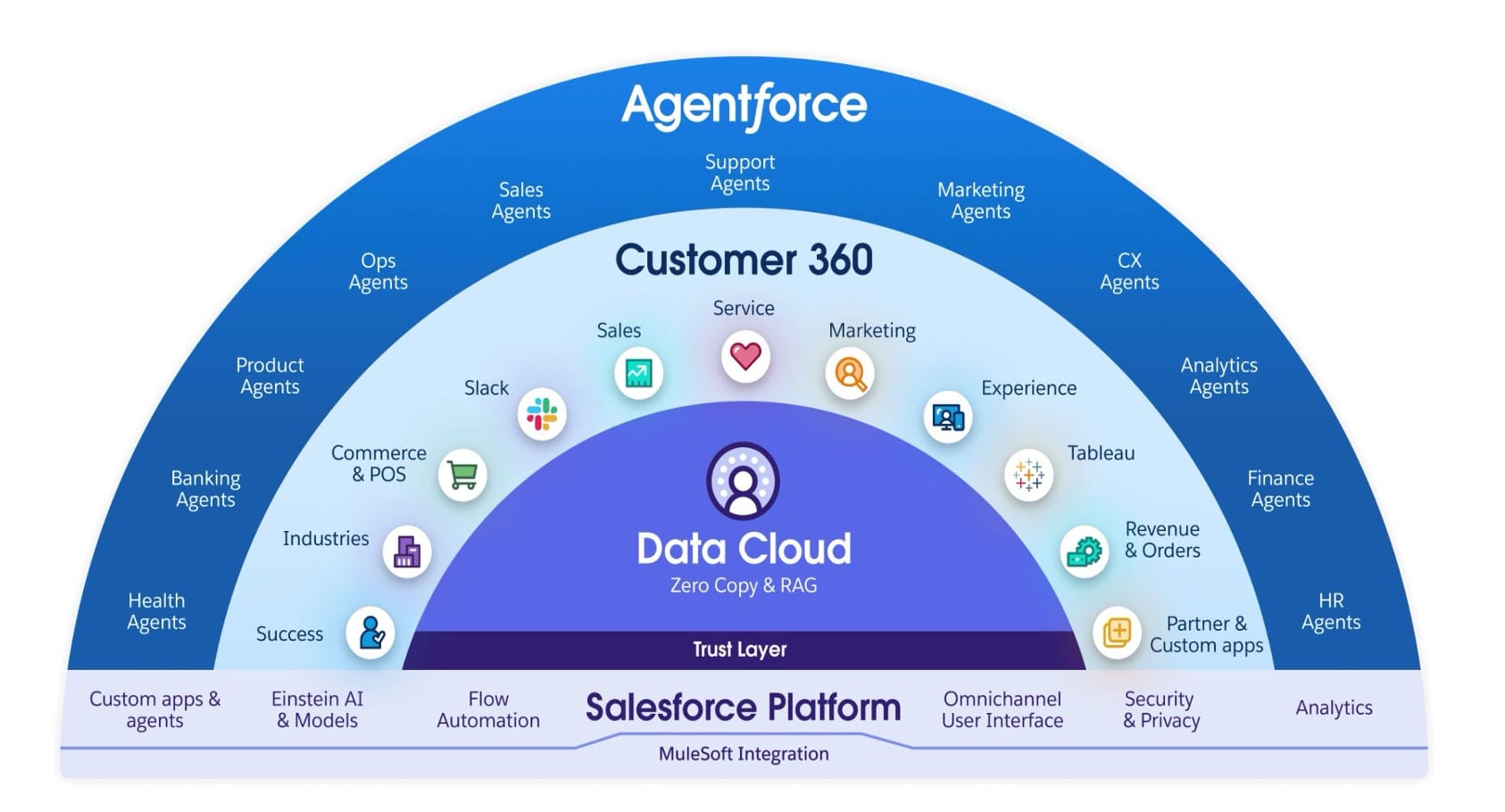
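The practical difference between batch and streaming is when a rule gets evaluated: a batch job checks yesterday’s transactions, while a streaming job checks each event as it arrives. The toy sketch below shows the idea in plain Python with a sliding time window per card. The rule (more than three transactions from one card within 60 seconds), the event format, and the `flag_bursts` name are hypothetical; in production this logic would run inside a streaming engine such as Spark Structured Streaming, not a single process.

```python
from collections import defaultdict, deque
from typing import Iterable, Iterator, Tuple

Event = Tuple[str, float]  # (card_id, timestamp in seconds)


def flag_bursts(events: Iterable[Event], window_s: float = 60.0, max_tx: int = 3) -> Iterator[Event]:
    """Yield each event that takes its card above `max_tx` transactions within `window_s` seconds.

    Events are assumed to arrive in timestamp order.
    """
    recent = defaultdict(deque)  # card_id -> timestamps still inside the window
    for card_id, ts in events:
        window = recent[card_id]
        window.append(ts)
        # Drop timestamps that have slid out of the window
        while window and ts - window[0] > window_s:
            window.popleft()
        if len(window) > max_tx:
            yield card_id, ts


stream = [("card-A", 0), ("card-A", 10), ("card-B", 12), ("card-A", 20), ("card-A", 30), ("card-A", 100)]
for card_id, ts in flag_bursts(stream):
    print(f"possible fraud: {card_id} at t={ts}s")  # possible fraud: card-A at t=30s
```

Because the check runs per event, the suspicious transaction is flagged the moment it arrives rather than in the next day’s report.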

Contextual AI

AI doesn’t just see your data anymore – it understands how that data impacts your business. Take user behavior on a website: AI tracks searches, clicks, past purchases, and delivers personalized results instantly. The smarter your AI, the more it needs deep integration with your data platform.

AI Democratization

Text-to-SQL and natural language interfaces mean business owners can query data directly. No more waiting weeks for a report. Databricks Genie and Snowflake Cortex let non-technical users ask questions in plain language and get answers instantly.
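Whatever tool turns a plain-language question into SQL, the generated query still runs against real data, so it helps to limit what it can do. The sketch below is a hypothetical guardrail, not how Genie or Cortex work internally: it runs a query string (standing in for text-to-SQL output) on a SQLite connection switched to read-only with SQLite’s `PRAGMA query_only`, so a generated `DELETE` or `DROP` fails instead of changing data. The `sales` table, its rows, and the helper names are made up for illustration.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Build a small in-memory table with hypothetical sample data."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("North", 120.0), ("South", 80.0), ("North", 30.0)],
    )
    conn.commit()
    # From here on, this connection rejects any statement that would modify the database.
    conn.execute("PRAGMA query_only = ON")
    return conn


def run_generated_sql(conn: sqlite3.Connection, sql: str):
    """Run a (hypothetically AI-generated) query; return its rows, or None if it was rejected."""
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.DatabaseError as exc:
        print(f"rejected: {exc}")
        return None


conn = make_demo_db()
print(run_generated_sql(conn, "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"))
# [('North', 150.0), ('South', 80.0)]
print(run_generated_sql(conn, "DELETE FROM sales"))  # prints the rejection, then None
```

A read-only connection is only one layer; in a real warehouse you would typically also give the assistant a database role that has read-only grants on the data it is meant to see.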

“Data becomes more accessible to business owners. Your organization needs to be ready to act on insights immediately—not take another week to decide.”

Choosing Your Platform: Databricks, Snowflake, or Cloud-Native?

The million-dollar question. And yes, the answer really is – it depends.

When to Choose Databricks

You need real-time processing and AI-driven insights, and you have data engineers on staff. Databricks offers a complete machine learning ecosystem: train, validate, and deploy models directly on your data. It’s powerful, but it requires expertise.

When to Choose Snowflake

Your team is strong in SQL but light on engineering resources. You need security, compliance, and fast results without heavy maintenance. Snowflake excels at data warehousing and works seamlessly across AWS, Azure, and Google Cloud—perfect for companies with data silos across multiple clouds.

When to Go Cloud-Native (AWS, Azure, GCP)

You’re deeply invested in one cloud ecosystem, or you have specific compliance requirements that demand control at the infrastructure level. Native services like AWS Kinesis, Azure Synapse, or Google BigQuery can be cost-effective if you’re willing to invest in custom integration.

“The universal answer? It depends. And based on your needs, your future needs, we help you pick the right one. Using cloud-agnostic platforms like Databricks or Snowflake means you can run anywhere without heavy re-architecture.”

The Cost Question Nobody Wants to Ask

Let’s talk money. Because at the end of the day, every platform has a price.

The biggest cost? It’s not infrastructure. It’s people. Experts who understand your data, who can maintain the platform, who deliver insights your business actually uses.

“We’ve seen companies invest €7-10 million in platforms that nobody uses. Owning and evolving a platform requires skilled people who can turn data into value.”

Cost Visibility is Critical

One client came to us worried about their data platform spend. They didn’t know if it was worth it. We mapped every dollar to specific functionality using cloud cost management tools and tags. Suddenly, the business owner could see: this feature costs X, this pipeline costs Y. The verdict? The investment was justified. But without visibility, doubt creeps in.
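Mapping spend to functionality largely comes down to tagging every resource and then grouping the billing data by tag. The sketch below assumes a simplified, hypothetical line-item format (resource, cost, tags) rather than any specific cloud provider’s billing export; the tag key `feature`, the resource names, and the amounts are illustrative. It also surfaces untagged spend, which is usually the first gap to close.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical billing line items: one row per resource per billing period
line_items = [
    {"resource": "cluster-etl", "cost": Decimal("1200.00"), "tags": {"feature": "sales-pipeline"}},
    {"resource": "warehouse-bi", "cost": Decimal("800.50"), "tags": {"feature": "exec-dashboard"}},
    {"resource": "storage-raw", "cost": Decimal("300.25"), "tags": {"feature": "sales-pipeline"}},
    {"resource": "vm-sandbox", "cost": Decimal("150.00"), "tags": {}},  # nobody tagged this one
]


def cost_by_tag(items, tag_key="feature"):
    """Sum cost per value of `tag_key`; resources without that tag are grouped as 'untagged'."""
    totals = defaultdict(Decimal)  # Decimal() starts each bucket at 0
    for item in items:
        totals[item["tags"].get(tag_key, "untagged")] += item["cost"]
    return dict(totals)


report = cost_by_tag(line_items)
total = sum(report.values())
for feature, cost in sorted(report.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{feature:<16} {cost:>10.2f} ({cost / total:.0%})")
```

`Decimal` is used instead of `float` so that money adds up exactly across many line items.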

Start Small, Scale Smart

Don’t try to build for the next ten years on day one. Start with a clear business need, prove value, then scale. Use pay-as-you-go models. Chunk work into sprints. Get tangible results in weeks, not months.

How Levi9 Works with Clients

Over two decades, we’ve refined our approach:

Workshops First

We start with a few days on-site. Together, we map your business goals, assess your data maturity, and design an optimal approach. The output? A proposal with predictable timelines, costs, and team structure.

Right-Sized Teams

Most projects start with 4-5 people: architects, data engineers, analysts, and a delivery manager. But it depends. If requirements aren’t clear, we might start with just two architects to define the vision. If you have data silos across departments, we bring in cross-domain architects to establish company-wide principles.

“From zero to production, delivering value to end users? We’ve done it in five sprints—ten weeks.”

Speed to Value

We’ve helped companies onboard engineers to complex data projects in two weeks instead of a year. We’ve implemented Snowflake end-to-end in three months—a record time. Speed matters, but only if the foundation is solid.

What We've Learned

A decade of data projects teaches you a few things:

- Strategy First, Tools Second

Every successful project starts with clarity. Business goals. Data governance. The right skills. Only then do you pick tools.

- Review and Adapt

Data strategy isn’t static. Review it at least annually, and more often if your business is changing fast: acquiring companies, entering new markets, facing new regulations.

- Cost is About People, Not Just Platforms

Invest in your team. Train them. Empower them. A €1 million platform is worthless if nobody knows how to use it.

- Being Data-Centric Means More Than Collecting Data

Orchestrate strategy, governance, and quality to turn data into business value. That’s the real ROI.

The Future We See

AI will continue to reshape how we interact with data. Real-time insights will become table stakes. Multi-cloud architectures will be the norm, not the exception. And the companies that win? They’ll be the ones who built strong data foundations today.

“Good data foundations today determine your AI success tomorrow. That’s why we’re here—to help you build for the long term.”

In this article:

Kosta Stojakovic

Ihor Kozlov

Nikola Djordjevic